Introduction

The GEMS sensor is made by Sentek Systems, based in the Twin Cities. GEMS stands for Geo-localization and Mosaicing Sensor. It is mounted to the UAV in a nadir (straight-down) position (Figure 1) and is very light, only 170 g, so it can be used on a wide variety of platforms. It is composed of 1.3 megapixel RGB and mono cameras, which collect images simultaneously. Because it has its own GPS and accelerometer, image capture can be fully autonomous. All images are saved to a flash drive in .JPG format for easy use in the GEMS software tool.

The GEMS software is designed to make creating image mosaics simple for the user. The software finds the .JPG files on the flash drive and creates "orthomosaics" in RGB, NIR, and NDVI formats. It also gives the GPS locations of the images and the flight path.

Software Workflow

When users purchase the GEMS sensor, they also receive a free version of the GEMS software. I think the software is very easy to use and has a very good user interface. To create mosaics from the .JPGs collected during a flight, the user completes the following steps.

1. Locate the .bin file in the Flight Data folder on the USB drive. The folders on the drive are named by GPS time: Week = X, where X is the GPS week number, and TOW (time of week) = Hours - Minutes - Seconds.

2. Once the .bin file is loaded, you will see the flight path of the mission you are working with (Figure 2).

3. Before creating the mosaic, run an NDVI Initialization, in which the software performs a spectral alignment. This ensures that the spectral values are consistent, and the software also chooses which NDVI color map best suits the data set.

4. After the initialization is complete, go back to the run menu and click on the generate mosaics tab. For most users, a fine alignment is the best option and will give the best results.

5. Once the mosaics are created, you can view them in the software by going to the images tab and selecting the mosaic you would like to view.

6. One last, optional step is exporting the Pix4D file. This file contains the GPS locations of all the images and can be opened in Pix4D, an image processing program, to create a 3D representation of the imagery.


| Figure 2 This is the resulting flight path in the GEMS software when the .bin file is loaded. This is the flight from which all the imagery in Figures 3-11 was derived. |

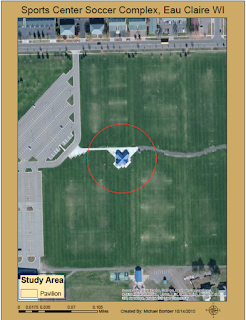

Generating Maps

Once the mosaic has run, there will be a tile folder wherever you set the file location. Inside each of these folders is a .tif file. The .tif files have GPS location data embedded in them; in other words, they are georeferenced. This is nice because the user can easily bring them into ArcMap or similar software and create maps with them. They will lie on top of a basemap in the right place on the surface of the earth because of the GPS data they contain.

This does not mean they are orthorectified, however. For an image to be orthorectified, it must be tied to a DEM (digital elevation model). This corrects the image for elevation so that it is a true representation of the surface of the earth. The GEMS software does not add elevation data to these images, so they are not orthorectified mosaics as the software claims.

In ArcMap the user can create maps of the different mosaics, edit the shape of a mosaic as they see fit, and pull the images into ArcScene. ArcScene gives a poor 3D representation of the area in the image, but that can be fixed if the user lays the image on top of a DEM for the area. Figures 3-11 are the maps I created using the .tif files produced by the GEMS software.


| Figure 3 This is the RGB Mosaic |

The RGB mosaic is easy to compare to the basemap imagery provided by ESRI. If you zoom in, it becomes very clear that the image resolution of the basemap is not nearly as good as that of the data collected with the UAV. This higher pixel resolution makes NDVI analysis and other vegetation analysis more accurate. The UAV image overall has much higher detail than the basemap. The GEMS sensor only has a 1.3 megapixel camera, so with a camera like the SX260, which has a 12 megapixel resolution, you would get even higher detail and an even clearer image.


| Figure 4 This is the FC1 NDVI Mosaic |

The color scheme for the FC1 NDVI shows healthy vegetation as oranges and reds. This is backwards from how most people think, but in the image you can see that the grass areas are orange, meaning they are healthy, and the blacktop or concrete areas, as well as the roof of the pavilion, are blues and blacks, meaning poor to no health. NDVI (Normalized Difference Vegetation Index) compares how much near-infrared and red light an area reflects. Healthy plants going through photosynthesis absorb most red light and reflect a lot of near-infrared light, so they have high NDVI values, and that contrast is what the software looks at to create the NDVI.
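For reference, NDVI is conventionally defined as (NIR - Red) / (NIR + Red), where NIR and Red are the near-infrared and red reflectance of a pixel. The Python sketch below shows that standard formula only. It is not the GEMS software's internal code, and the function name and the sample reflectance values are made up for illustration.

```python
def ndvi(nir, red):
    """Standard NDVI for one pixel: (NIR - Red) / (NIR + Red).

    Returns 0.0 when both bands are zero to avoid dividing by zero
    (an illustrative choice, not something taken from GEMS).
    """
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


# Hypothetical reflectance values (0 to 1), not measurements from this flight.
samples = {
    "grass": (0.50, 0.08),     # strong NIR reflectance, red mostly absorbed
    "blacktop": (0.10, 0.09),  # similar reflectance in both bands
}

for surface, (nir, red) in samples.items():
    print(f"{surface}: NDVI = {ndvi(nir, red):.2f}")
```

Values always fall between -1 and 1. Healthy vegetation lands toward the high end, while pavement and rooftops sit near zero, which matches the pattern in the maps.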


| Figure 5 This is the FC1 NDVI Mosaic in ArcMap |

This version of the FC1 is slightly different from the one above. When I brought it into ArcMap, I changed the color scheme for the reflectance values. This gives you a better idea of which areas are healthy and which aren't. Again, red is healthy and green is dead in this color scheme.


| Figure 6 This is the FC2 NDVI Mosaic |

FC2 shows the reflectance levels in the way most people are used to seeing them: green is good and red is dead. This color scheme makes sense. The areas that are green in this NDVI are the same ones that were red on FC1, which means they are healthy. The red areas on this map are the same as the black or green areas on the FC1. The two maps display the same values, just in different color schemes.


| Figure 7 This is the FC2 NDVI Mosaic in ArcMap |

I took the FC2 and changed the colors in ArcMap just as I did with the FC1. You can see there is better vegetation value variance in this color scheme than in the GEMS software image. ArcMap breaks the values down into more categories which gives you the wider range of colors in the map. Again red is dead and green is good.


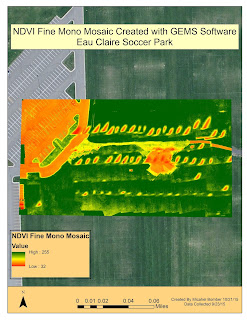

| Figure 8 This is the Fine NDVI Mono Mosaic |

The fine NDVI mono shows the reflectance levels on a scale from high to low. The GEMS software does not assign a color scheme to this NDVI. Healthy, high-reflectance areas are white, and low-health areas are gray to black. This fine NDVI is better, meaning more accurate, than the regular mono NDVI (Figure 10).


| Figure 9 This is the Fine NDVI Mono Mosaic in ArcMap |

This is the same NDVI as in Figure 8, only I assigned a color ramp to the values in ArcMap. The green areas are healthy and the oranges and reds are dead; the path, which is yellow, is also dead. The mono NDVI lets you choose whichever color scheme you prefer in ArcMap to display the data.


| Figure 10 This is the NDVI Mono Mosaic |

This is the normal mono NDVI. This one isn't as detailed as the fine mosaic. Again it is showing the reflectance levels. Below I brought it into ArcMap and changed the color ramp.


| Figure 11 This is the NDVI Mono Mosaic in ArcMap |

If you look at the UAV mosaic in any of the maps above, you will see some striping that doesn't look quite right. The stripes run from the lower left to the upper right of the images. This is an error in the mosaic created when the images were stitched together. If you look very closely, you can see that the stripes fall where two or more images come together. This could be an error from the NDVI initialization, which is supposed to make all the values uniform. There are no big dead patches of grass in the soccer park.

Discussion/Critique

Pros

The GEMS sensor and software package gives the user a very simple and straightforward way to collect aerial imagery and run simple analysis on the data. Its light weight, small size, and fully autonomous operation make it ideal for a wide variety of platforms and situations.

The software is very easy to use and the user interface is very easy to follow. A person with very basic knowledge of UAVs and vegetation data analysis is able to use the software and produce useful mosaics. The file structure that is established while collecting data and running the analysis makes it very simple to stay organized and find the different file types created.

Cons

There are two areas where improvements would greatly increase the usefulness of this sensor. First is the very poor pixel resolution. 1.3 megapixels is terrible considering how advanced camera technology is. This low resolution limits what the sensor can be used for. The cameras may be sufficient for agricultural applications, but this sensor can and should be used in other industries. Surveying mines and doing volumetric analysis is one area where this sensor would be great with better cameras on it. Volumetric analysis requires very high detail images, 20 to 40 megapixels, although the SX260 camera, which is 12 megapixels, could also be used. This would not be a hard problem to fix with the GEMS sensor. With phone camera technology as advanced as it is, one of those cameras could easily replace the existing camera and make the unit more versatile.

Another area where this sensor would be great to use with better cameras is search and rescue applications. High resolution images are needed when you are looking for an object in the woods or elsewhere. This sensor is so light that it does not hinder flight time very much, and with better cameras it could be very useful in this industry.

Even in agricultural applications, better cameras would be ideal. Right now you can look at the overall health of a large area, but in some fields of agriculture, like vineyards, growers want to look at each individual plant's health. You need a much higher resolution camera to do that. My recommendation is that a camera of at least 12 megapixels be implemented in the GEMS sensor. This would greatly expand the areas in which it could be used.

The other area that needs improvement is also linked to the camera. The field of view of the present camera is only 30 degrees, which is basically straight down. This reduces distortion in the images, but it also makes the flight grids for the sensor way too tight. Close flight lines mean longer flight times to cover small areas. Many platforms don't have flight times past 20 minutes to a half hour, and when using the GEMS sensor they may have to do 2 or 3 flights to cover an area that could be covered in one flight with a different camera. I would suggest a camera with at least a 60 degree field of view. Some may argue that the images will be distorted, but that is not true. If the image overlap is sufficient, usually about 75 percent, the distortion will be very minimal.
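To put rough numbers on the flight line argument, here is a small Python sketch using basic trigonometry: a nadir camera at altitude h with field of view θ covers a ground width of 2·h·tan(θ/2), and adjacent flight lines are spaced by that width times (1 - overlap). The 100 m altitude and 400 m area width are hypothetical values chosen for illustration, the function names are my own, and I am assuming the quoted field of view applies across the flight direction and that the 75 percent overlap applies between flight lines.

```python
import math


def footprint_width(altitude_m, fov_deg):
    """Ground width covered by one image from a nadir-pointing camera."""
    return 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)


def line_spacing(altitude_m, fov_deg, overlap):
    """Distance between adjacent flight lines for a given overlap (0 to 1)."""
    return footprint_width(altitude_m, fov_deg) * (1 - overlap)


def lines_needed(area_width_m, altitude_m, fov_deg, overlap):
    """Number of parallel flight lines needed to span the area width."""
    return math.ceil(area_width_m / line_spacing(altitude_m, fov_deg, overlap))


# Hypothetical mission: 100 m altitude, 400 m wide area, 75 percent overlap.
for fov in (30, 60):
    width = footprint_width(100, fov)
    count = lines_needed(400, 100, fov, 0.75)
    print(f"{fov} degree FOV: {width:.1f} m footprint, {count} flight lines")
```

Under these assumptions the 60 degree camera covers a little more than twice the ground width per pass, so it needs fewer than half as many flight lines to cover the same area.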

For the software, the only suggestion I have for improvement is the file naming convention for when the flights were taken. The format as it is right now needs an online translator to get it into a date that the average person can understand. A format of Month-Day-Year and Hour-Minutes-Seconds would be much easier to understand.
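In the meantime, the conversion is simple enough to script. GPS week 0 began on January 6, 1980, and the time of week counts from the start of each week (Sunday at midnight). The Python sketch below is only an illustration: the function names are mine, and I am assuming the folder's TOW is given as hours, minutes, and seconds since the start of the week and that the week number is the full count since 1980 rather than a rolled-over value.

```python
from datetime import datetime, timedelta

GPS_EPOCH = datetime(1980, 1, 6)  # start of GPS week 0


def gps_to_datetime(week, hours, minutes, seconds, leap_seconds=0):
    """Convert a GPS week plus time of week to a calendar date and time.

    leap_seconds is subtracted to go from GPS time to UTC; the default
    of 0 leaves the result on the GPS time scale.
    """
    time_of_week = timedelta(hours=hours, minutes=minutes, seconds=seconds)
    offset = timedelta(seconds=leap_seconds)
    return GPS_EPOCH + timedelta(weeks=week) + time_of_week - offset


def readable(moment):
    """Format as Month-Day-Year Hour-Minutes-Seconds."""
    return moment.strftime("%m-%d-%Y %H-%M-%S")


# Hypothetical folder values, not taken from the flight in this post.
print(readable(gps_to_datetime(week=1880, hours=61, minutes=30, seconds=15)))
```

GPS time does not include leap seconds, so it has run 18 seconds ahead of UTC since 2017. Pass `leap_seconds` if that difference matters, and convert to local time separately.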

In conclusion, I do like this sensor and software, but my continued use of it will hinge on whether or not improvements to the camera are made. The present camera really limits what this sensor can be used for, which is unfortunate because there are a lot of very convenient features that make this sensor a go-to for imagery collection on a UAV.