Introduction

This week's activity was all about conducting a flight with an Unmanned Aerial System. Last week we focused on the planning and preflight checks that need to be done before a mission, but we were unable to fly because of poor weather conditions. This week the students went through all the preflight and mission planning procedures again to emphasize safety and awareness of surroundings, and then we conducted the missions they planned. I was the Pilot at the Controls (PAC), actually piloting the flights, for all of the missions, with other students serving as the Pilot in Command (PIC) at the ground station and as spotters. While I was working with students on creating missions to fly with the Matrix (Figure 1), Dr. Hupy was showing another group of students how to plan and fly a mission with the 3DR Iris (Figure 2), and Dr. Pierson was going over the basics of batteries with the other students. All the students rotated through each of these stations over the course of the class. Dr. Pierson also brought along an RC plane (Figure 3) just like the one the students are currently building in class to show them how it flies and give them some tips. It was a busy class period, but I think the students really gained a lot of knowledge through everything being hands-on and interactive.

Study Area

The class met at the Eau Claire Indoor Sports Complex again at the soccer fields (Figure 4), as we have in weeks past. The weather forecast changed about 10 times, so we didn't know if this activity was going to happen or not, but the weather turned out to be perfect for conducting the missions. It was very cloudy and a little bit hazy with a dew point around 70%. Rain was expected sometime in the evening; however, we got class in before it rained. There was very little to no wind, which is ideal for flying with UAS. The lack of wind also allowed Dr. Pierson to fly his RC plane. It needs to be almost calm for the plane to fly because it is so small and light.

Figure 4. This is the soccer complex here in town where we had class again this week, as in weeks past. The red area is where the flights were conducted.

Methods

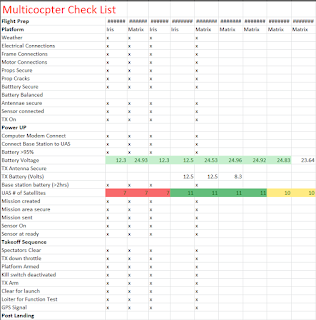

Many of the procedures this week were the same as or very similar to last week's, because the students went through the preflight and mission planning last week; we just didn't get a chance to actually fly. Please refer to my Activity 2 blog for the methods related to mission planning and the preflight checklist. This week was a little bit different, however, because the students got to see the sensors or cameras that turn an Unmanned Aerial Vehicle (UAV) into an Unmanned Aerial System (UAS). A UAV with no cameras or other sensors to collect data is basically a glorified, really high-end toy. You can fly it around, but what is the point if you aren't collecting data of some kind to be used later? The real money in this field is in the data, not in the platform. The students got some time seeing and working with the different sensors we use for collecting data. We used four sensors during class: the GoPro HERO3, the Canon SX260, the Canon S110 and the GEMS sensor from Sentek Systems in the Twin Cities.

The GoPro (Figure 5) is a very easy way to collect aerial images and is especially good for capturing video in a follow-me kind of application. It is light and compact, so it can be put on almost any platform; however, it cannot be triggered and only collects in continuous interval mode. It doesn't work very well when trying to collect nadir aerial imagery.

The Canon SX260 (Figure 6) and S110 (Figure 7) are much better suited for collecting nadir imagery. They both geotag the images as they are collected, which works much better with the image processing software, and they both can be triggered to take images or be set to a time interval collection like the GoPro. They are 12 and 16 megapixels respectively and have a fairly wide view, which makes them good for increasing the distance between flight paths while still getting enough overlap.

The GEMS (Figure 8) is a custom sensor built by Sentek Systems out of the Twin Cities. It is a self-contained unit that has its own GPS, accelerometer and dual cameras, RGB and infrared, which makes it ideal for collecting aerial imagery. The sensor is also very light, allowing us to put it on our big platforms like the Matrix, a small platform like the Iris, or even a fixed-wing aircraft. One drawback to this sensor is its view width. It is very narrow and basically only gathers data directly below the sensor, which increases flight time because the flight paths have to be so close together to get the proper amount of overlap. Better pixel quality from the cameras would also be a nice addition to this sensor. The ease of use is what has made this sensor our go-to for data collection in the past.
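To put the view width point in numbers, here is a rough sketch of how the width of a sensor's view changes the spacing between flight paths. It assumes a simple nadir-pointing camera over flat ground. The function names, field-of-view angles, altitude, field width and overlap value are all made-up illustrations; they are not specs for the GEMS or the Canon cameras, and this is not how our mission planning software does the calculation.

```python
import math


def footprint_width(altitude_m, hfov_deg):
    """Ground width covered by one image, for a nadir camera over flat ground."""
    return 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)


def line_spacing(altitude_m, hfov_deg, sidelap):
    """Distance between neighboring flight paths for a given side overlap (0 to 1)."""
    return footprint_width(altitude_m, hfov_deg) * (1.0 - sidelap)


def lines_needed(field_width_m, altitude_m, hfov_deg, sidelap):
    """Rough count of parallel flight paths needed to cover the field width."""
    spacing = line_spacing(altitude_m, hfov_deg, sidelap)
    return math.ceil(field_width_m / spacing)


if __name__ == "__main__":
    # Hypothetical numbers: 200 m wide field, 100 m altitude, 75% side overlap.
    for label, hfov in [("narrow view (30 deg, assumed)", 30.0),
                        ("wide view (70 deg, assumed)", 70.0)]:
        n = lines_needed(200.0, 100.0, hfov, 0.75)
        print(f"{label}: {n} flight paths")
```

With the same altitude and overlap, the narrower view needs noticeably more passes over the same field, which is where the extra flight time comes from.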

Once the students had been introduced to these different sensors, it was time to fly. Four flights were conducted with the Matrix. Two were with the GEMS sensor and the other two were with the SX260 camera. One of the SX260 cameras we have is an infrared model, so one flight was RGB and then the same flight path (Figure 9) was flown with the infrared SX260. All of the data collected is on the computers at school and will be processed by the class later this semester, when we move from the data collection portion of the class to the analysis and processing portion. The Iris was also flown by Dr. Hupy, who demonstrated the mission planning procedure for that platform and conducted an auto mission with the GoPro attached to gather the data. He also demonstrated some of the other functionalities of the Iris, including the follow-me function, which is often used by people who are more interested in creating videos than in collecting still images.

Figure 5. This is the GoPro HERO3. We use this every once in a while for imagery collection, but we have better sensors that are easier to work with when it comes to the imagery processing.

Figure 6. This is the Canon SX260 camera we use for much of our data collection.

Figure 7. This is the Canon S110, which is pretty similar to the SX260 but is slightly smaller and has 16 megapixels compared to 12.

Figure 8. This is the GEMS sensor made by Sentek Systems. You can see the two cameras side by side, which let it collect RGB and IR images simultaneously.

Discussion

This exercise really made this class and material more interesting for me, and from the comments I heard from other students during class, they are starting to understand the different parts and procedures of this technology. This comes back to the hands-on approach of this class. It was disappointing last week when we didn't get to fly; however, I think splitting the mission planning and preflight from the actual flying of the mission is a good thing. It gave the students two chances to really learn the procedures, and it also tested how much they remembered from last week, since they had to use those skills this week to do the flights. As the GEI technician and in doing my research with Dr. Hupy, I have flown many flights, but it is always exciting when we get the chance to go and fly. Weather and mechanical obstacles have often prevented us from being able to collect data, but when we finally get a chance and it all goes smoothly, it is fun. It is rare to find an occupation that is very serious and demanding but also fun, and that is what data collection with UAS is. We strictly follow our safety procedures and we document all of our flights, but at the same time it is fun because I enjoy what I am doing. I think this week the students got to experience that too. Last week was very serious, focused on the safety and planning aspect, and this week they got to actually see missions being flown, which is fun and exciting while still serving a good and serious purpose. The next couple of weeks, when we get to process the data, will be cool too because you get to see a final product of your work. The students will know how to go through the whole UAS process from preflight all the way through data processing and analysis, which is a very valuable skill set to have once they are in the workplace.